To show the community our technical capabilities and upcoming research, the 21CP team exhibited a range of demos at the ICED 2023 conference this week!

We had three sets of demos up and running for attendees to try:

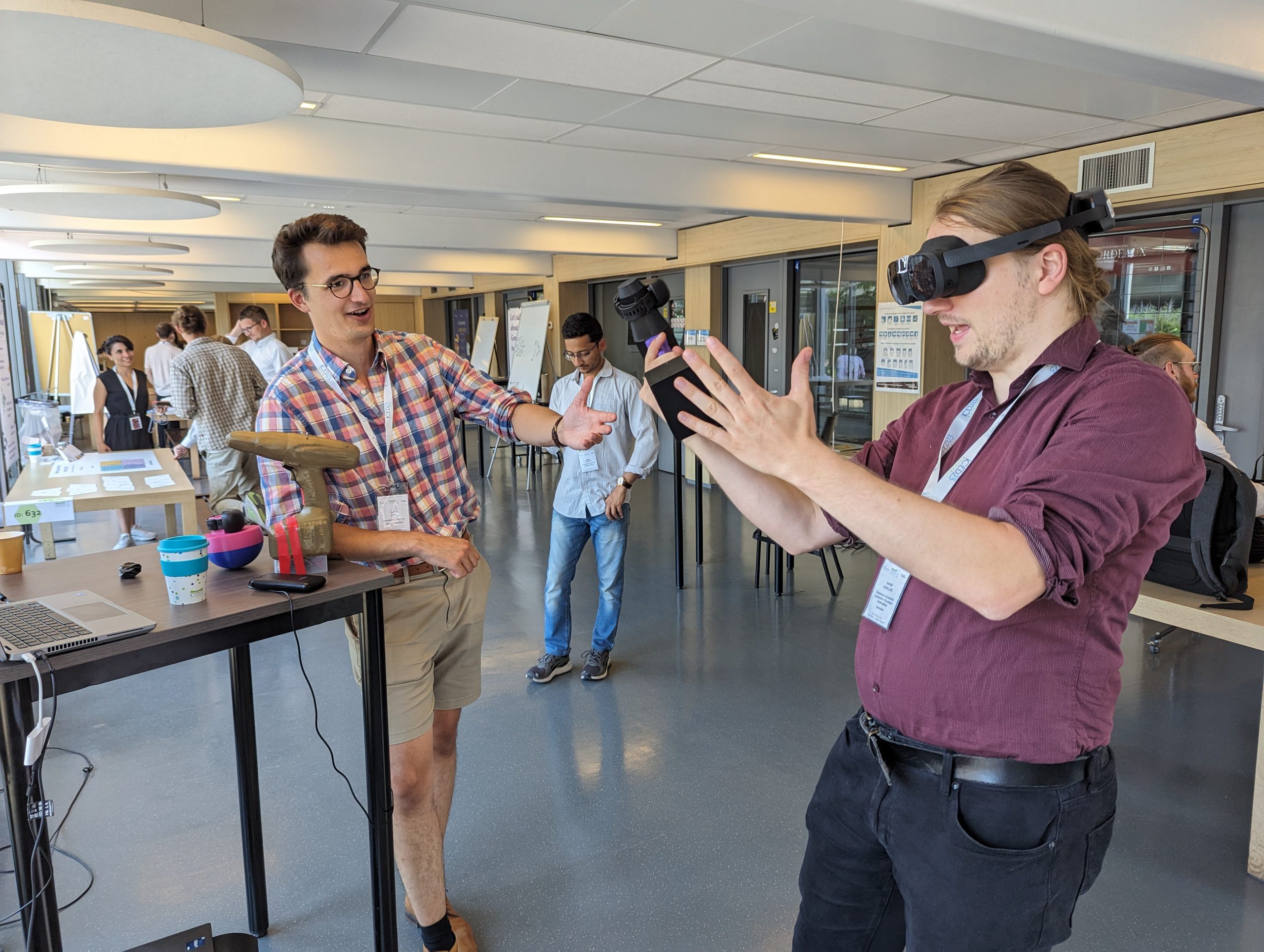

Design in VR: Aman led the charge with a demo showing the different ways that we can design geometry in a 3D world, including 3D sketching, object construction from primitives, VR sculpting, and full parametric design embedded directly in a VR world. The last one is especially exciting – VR design tools to date aren’t parametric, so they aren’t compatible with the engineering pipeline. Our system links open parametric design tools directly to Unity, meaning that we can create production-ready geometry with all the benefits of working in VR!

Mixed Reality Design: The two Chrises’ demo showed off the potential to bring physical objects into VR design worlds. The first part synchronised physical objects with their digital representations, giving people the ability to literally hold the digital objects they can see while also letting them instantly edit size, shape, and aesthetic. The second part added gesture-controlled sketching, allowing 3D annotation of models and editing of geometry, all controlled directly through physical movement.

High-fidelity, low-cost hand tracking: James led on the final demo, which showed our low-cost solution for tracking user interactions with products in real time. Rather than use expensive industry-ready solutions (which actually have fairly major issues), we use a bank of webcams and MediaPipe to track multiple hands in real time, with mm precision and without worrying about occlusion. Part of the system is a clever machine-learning approach to calibration that removes all of the headaches we usually have to deal with, which James will run through in another post!
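This post doesn't go into how the webcam views are combined, so here is a generic illustration rather than our actual code. One common way to turn 2D landmarks seen by several calibrated cameras into a single 3D point is linear least-squares (DLT-style) triangulation. The sketch assumes each camera's 3x4 projection matrix is already known (for example, from a calibration step) and that the 2D landmark coordinates are expressed in the same image coordinates those matrices use; `triangulate` and `_solve3` are hypothetical names. A camera that can't see a landmark is simply left out, which hints at why multiple views help with occlusion.

```python
from typing import List, Sequence, Tuple

# Hypothetical type alias: a 3x4 camera projection matrix (assumed known from calibration).
Matrix34 = Sequence[Sequence[float]]


def triangulate(projections: List[Matrix34],
                points_2d: List[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Linear least-squares triangulation of one landmark from >= 2 views.

    Each camera P with observation (u, v) contributes two linear equations in X = (x, y, z, 1):
        u * (P[2] . X) - (P[0] . X) = 0
        v * (P[2] . X) - (P[1] . X) = 0
    Cameras that lost the landmark (e.g. occluded) are simply omitted from both lists.
    """
    if len(projections) != len(points_2d) or len(projections) < 2:
        raise ValueError("need matching observations from at least two cameras")

    rows, rhs = [], []
    for P, (u, v) in zip(projections, points_2d):
        for coord, r in ((u, 0), (v, 1)):
            row = [coord * P[2][j] - P[r][j] for j in range(4)]
            rows.append(row[:3])   # coefficients of x, y, z
            rhs.append(-row[3])    # constant term moved to the right-hand side

    # Normal equations (A^T A) X = A^T b reduce the problem to a 3x3 system.
    ata = [[sum(a[i] * a[j] for a in rows) for j in range(3)] for i in range(3)]
    atb = [sum(a[i] * b for a, b in zip(rows, rhs)) for i in range(3)]
    x, y, z = _solve3(ata, atb)
    return (x, y, z)


def _solve3(m, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    aug = [list(m[i]) + [b[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate camera geometry")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, 3):
            f = aug[r][col] / aug[col][col]
            for c in range(col, 4):
                aug[r][c] -= f * aug[col][c]
    sol = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):  # back substitution
        sol[i] = (aug[i][3] - sum(aug[i][j] * sol[j] for j in range(i + 1, 3))) / aug[i][i]
    return sol
```

For example, with one camera at the origin (`[[1,0,0,0],[0,1,0,0],[0,0,1,0]]`) and a second shifted one unit along x (`[[1,0,0,-1],[0,1,0,0],[0,0,1,0]]`), the observations `(0.25, 0.1)` and `(-0.25, 0.1)` triangulate to roughly `(0.5, 0.2, 2.0)`. In practice, solving via the normal equations can be poorly conditioned with raw pixel coordinates; normalising coordinates or using an SVD-based solver (such as OpenCV's `triangulatePoints` for two views) is usually more robust.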

The team had loads of interest, lots of conversations and new connections, took up way too much floor space with loads of trip hazards, and have come back full of ideas for the next pieces of work!

And of course, all the code is available on GitHub for anyone to use.