Physical-Digital Prototyping

This project ran as a summer internship in July and August 2021. It explored how physical and digital prototypes can be combined and synchronised to enable new capabilities using immersive reality technologies and approaches.

Here, we used virtual and augmented reality tracking technologies to synchronise an early-stage physical prototype with a realistic digital version. Once synchronised, users can manipulate the physical prototype directly and see their actions replicated on the digital version, giving them a way to interact with an as-final prototype right from the earliest stages of the design process.

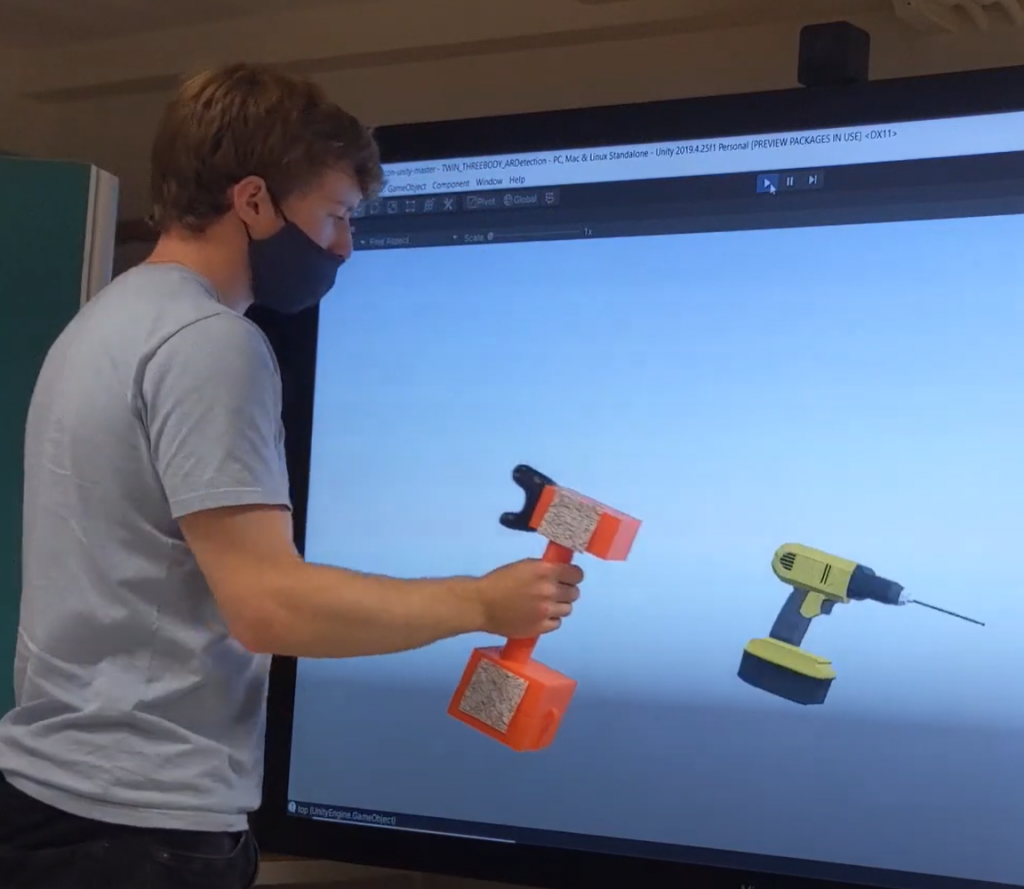

We used a technique known as a digital mirror, where the digital model is shown on a screen as if it were a reflection of the physical prototype held in front of it. Users can move the physical object while seeing themselves on the screen holding and using the as-final version. They can also reconfigure the physical version with new geometry, handles, or parts, and see the changes replicated in as-final form in the reflection while physically feeling the difference in the object in their hand.
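Geometrically, the mirror effect amounts to reflecting the tracked position of the prototype across the plane of the screen. The following is a minimal Python sketch of that reflection, not the project's Unity code. The function name, the choice of the z = 0 plane for the screen, and the sample coordinates are all assumptions made for illustration.

```python
import numpy as np

def reflect_point(point, plane_point, plane_normal):
    """Reflect a 3D point across a plane given by a point on it and a normal."""
    n = np.asarray(plane_normal, dtype=float)
    n = n / np.linalg.norm(n)  # unit normal, so d below is a true distance
    p = np.asarray(point, dtype=float)
    d = np.dot(p - np.asarray(plane_point, dtype=float), n)  # signed distance to plane
    return p - 2.0 * d * n

# Assumed setup: the screen lies in the z = 0 plane and faces +z towards the user.
tracker_position = [0.1, 1.2, 0.5]  # hypothetical reading, 0.5 m in front of the screen
mirrored = reflect_point(tracker_position, [0, 0, 0], [0, 0, 1])
print(mirrored)
```

This sketch covers position only. A full mirror would also need to reflect the prototype's orientation, which is omitted here.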

The technology we’ve developed speeds up the process of learning from prototypes, gives non-technical users a more accessible way of providing feedback earlier in the design process, and allows designers to get more information from lower-fidelity physical prototypes without sacrificing quality.

How it works - Low-fidelity to High-fidelity digital prototyping mirror

All files used to create this system are available on GitHub.

When the Unity program recognises pre-programmed AR image targets, it loads the respective parts into the digital space. 3D tracking is then handled by the VR system, with an HTC tracker attached to the prototype. A high-fidelity digital model is displayed on a screen, and the real-time tracking and dimensional similarity give the user the perception of a functional ‘AR Mirror’.
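The target-to-part logic can be thought of as a lookup from recognised image targets to the digital parts that should be shown. The Python sketch below illustrates that idea only. The target names, part names, and the `visible_parts` helper are hypothetical and do not come from the project's files.

```python
# Hypothetical target and part names, for illustration only.
TARGET_TO_PART = {
    "target_head": "drill_head",
    "target_base": "drill_base",
}
ALWAYS_VISIBLE = {"drill_handle"}  # assumed: the anchor part is shown regardless

def visible_parts(detected_targets):
    """Return the set of digital parts to show for the detected image targets."""
    parts = set(ALWAYS_VISIBLE)
    for target in detected_targets:
        part = TARGET_TO_PART.get(target)
        if part is not None:  # ignore targets the program does not know
            parts.add(part)
    return parts

print(sorted(visible_parts(["target_head"])))
```

Keeping the mapping in one table means a new physical component only needs a new image target and one extra entry.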

For this example, a 3D printed drill has been split into three parts. There are AR targets on the head and bottom, while the handle acts as an anchor and is always included. Users can swap components for alternative versions to test their impact on product usability, such as different ergonomic grips, or different masses in the head and bottom to test mass distribution.

Model functionality: All pieces attach to one another with basic interference ‘dovetail’ joints. AR image targets are stuck on with tape, and the VR tracker is attached using a screw glued to the 3D printed part. The base of the drill is hollow and can be split in two using snap joints, which allows the weight of the base to be customised at will.

Digital functionality: Sections of the drill can be customised, with keyboard inputs generating a random colour for each section. The program reveals parts of the drill when specific image targets are shown. Currently, there are targets for the base and the main body of the drill.
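The colour customisation can be sketched as a key binding that assigns a fresh random RGB value to one section. This Python example is illustrative only. The section names, key bindings, and function names are assumptions and are not taken from the Unity program.

```python
import random

SECTIONS = ["head", "handle", "base"]                       # hypothetical section names
KEY_TO_SECTION = {"1": "head", "2": "handle", "3": "base"}  # hypothetical key bindings

def random_colour(rng):
    """Return a random RGB colour with each channel in [0, 1)."""
    return (rng.random(), rng.random(), rng.random())

def on_key(key, colours, rng):
    """Recolour the section bound to `key`; unbound keys leave colours unchanged."""
    section = KEY_TO_SECTION.get(key)
    if section is not None:
        colours[section] = random_colour(rng)
    return colours

rng = random.Random(0)                            # seeded only to make the demo repeatable
colours = {s: (1.0, 1.0, 1.0) for s in SECTIONS}  # start with every section white
on_key("2", colours, rng)
print(colours["handle"])
```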

Accuracy and errors: Once running with the correct values, the program exhibits few significant errors. The two main contributors to errors are calibration and dimensional accuracy.

Calibration can be split into rotational calibration and positional calibration. Positional calibration is done initially by placing the prototype at a predetermined location from the screen. Rotational calibration is done by eye, matching the angle of movement in the Unity scene to the real world.
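One way to picture the two calibration steps is as a rotation followed by a translation applied to each raw tracker position. The Python sketch below assumes a y-up coordinate system, treats the by-eye rotational calibration as a single yaw angle, and derives the positional offset from one reading taken at the predetermined location. All names and numbers are hypothetical.

```python
import numpy as np

def yaw_matrix(degrees):
    """Rotation about the vertical (y) axis by the given angle."""
    a = np.radians(degrees)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])

def solve_offset(raw_at_reference, reference_position, yaw_degrees):
    """Positional calibration: offset that maps the raw reading onto the known spot."""
    rotated = yaw_matrix(yaw_degrees) @ np.asarray(raw_at_reference, dtype=float)
    return np.asarray(reference_position, dtype=float) - rotated

def calibrate(raw, yaw_degrees, offset):
    """Apply the rotational, then the positional, correction to a raw position."""
    return yaw_matrix(yaw_degrees) @ np.asarray(raw, dtype=float) + offset

# Hypothetical values: yaw tuned by eye, reference spot 1 m in front of the screen.
yaw = 12.0
offset = solve_offset([0.3, 1.0, 2.1], [0.0, 1.0, 1.0], yaw)
print(calibrate([0.3, 1.0, 2.1], yaw, offset))
```

Because the offset depends on the yaw, it has to be recomputed whenever the rotational calibration is adjusted.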

Dimensional accuracy can be split into the screen size and the model size. The screen size is entered by hand in the Projection Plane gameobject, according to the monitor dimensions. The AR mirror effect is strongest when the sizes of the digital and physical models correspond reasonably accurately, so the scale of the digital model may need to be tweaked (a model exported from CAD at true scale should need no adjustment).
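Both dimensional inputs reduce to simple arithmetic. The Python sketch below shows one way to derive them. It assumes the monitor is specified by its diagonal and aspect ratio and that the model is scaled uniformly. The helper names and example figures are hypothetical.

```python
import math

def screen_size_m(diagonal_inches, aspect_w=16, aspect_h=9):
    """Width and height in metres from a monitor's diagonal and aspect ratio."""
    diagonal_m = diagonal_inches * 0.0254  # inches to metres
    unit = diagonal_m / math.hypot(aspect_w, aspect_h)
    return aspect_w * unit, aspect_h * unit

def model_scale(physical_length_m, digital_length_units):
    """Uniform scale that makes the digital model match the physical one."""
    return physical_length_m / digital_length_units

width, height = screen_size_m(27)  # hypothetical 27-inch 16:9 monitor
print(round(width, 3), round(height, 3))

# Hypothetical case: the physical part measures 0.25 m, the digital one 0.5 units.
print(model_scale(0.25, 0.5))
```

Measuring the visible panel directly with a ruler is an equally valid source for the width and height, and avoids relying on the advertised diagonal.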

If the line of sight from the tracker to the lighthouses is blocked, responsiveness decreases, the model drifts, and movement eventually stops. Otherwise, the program is reliable, with excellent responsiveness and no tracking issues.

Future outlook: Physical-digital prototyping is a priority work area for the group, and we’re keen to develop this and future technologies over the next few years. Our current plans include extending the physical and digital reconfiguration and manipulation options for the prototypes, exploring different technologies for synchronising and representing the digital version, introducing new immersive technologies such as haptics and gesture control, and integrating lightweight real-time analytics to give further understanding of the prototypes’ performance.

People

Will Mullins, Researcher

Lee Kent, Researcher

Chris Snider, Senior Lecturer

Blog Posts

TURA investigators visit Bristol

From the 21st to the 24th June, I was very glad to host some of the co-investigators (Dr. Aleksandra Kristikj, Dr Giacomo Barbeiri and Associate Professor Freddy Zapata) from our WUN funded, Transdisciplinary Urban Agriculture (TURA) project in Bristol where we progressed work on the project and visited a range of agricultural sites in Bristol […]

UK-Colombia collaboration and WUN bid submission

I returned to visit la Universidad de Los Andes (Uniandes) in August this year after a pandemic induced hiatus. Prior to 2020, we had been working with Uniandes to develop proposals to do with agritech and transdisciplinary engineering. During the pandemic we were able to manage some collaboration through project clean access (leading to a […]

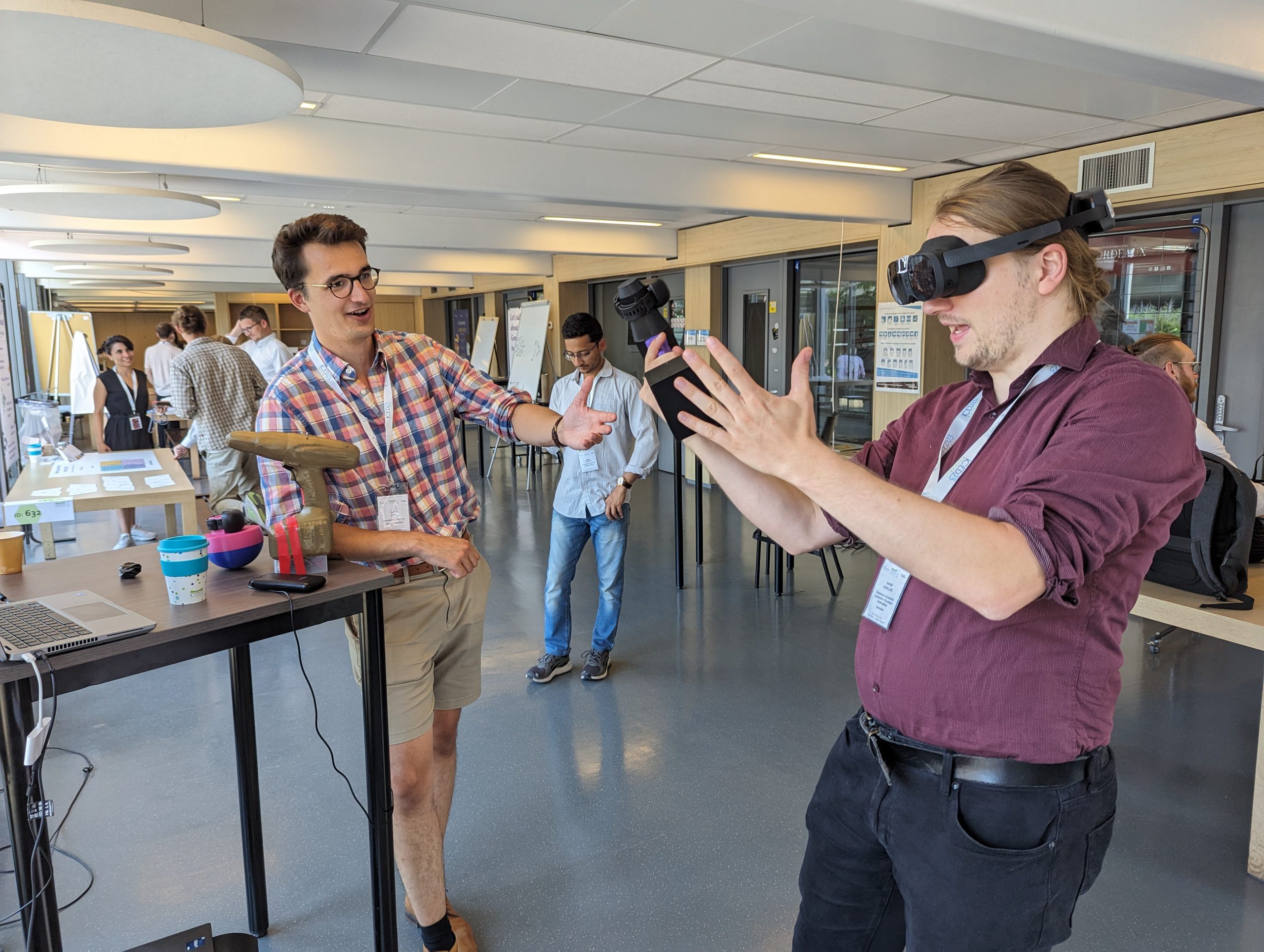

21st Century Prototyping exhibit at ICED 2023

Setting the stage to show the community our technical capabilities and upcoming research, this week the 21CP team exhibited a range of demos at the ICED 2023 conference! We had three sets of demos up and running for attendees to try: Design in VR: Aman led the charge with a demo showing the different ways […]

IDEA challenge 2023

The IDEA challenge returned in May 2023 for its third iteration! This time it was hosted by the University of Zagreb and building on the success of the the challenge’s first two iterations was set to be bigger and better than ever! The challenge saw teams working fully virtually to develop a CubeSat satellite that […]

21st Century Prototyping at the ProSquared Network+ Launch

This week Chris and the team joined the launch event for the new ProSquared Network on the Democratisation of Digital Devices. This 5-year network aims to connect industry and academia to find new ways to overcome production barriers at lower volumes, by making design, prototyping, and manufacture faster and more efficient. Doing so will be […]

DMF @ DCC’22

The DMF attended the tenth International Conference on Design Cognition and Computing (DCC’22) in Glasgow and received the best poster prize and runner up in the best paper award!

Technical Debt – No Time To Pay

In recent years there has been a lot of talk about technical debt in various fields. Technical debt is the cost that an individual or a group of people will incur in future for hasty decisions in the present, which are often motivated by lack of resources and/or time. Photo by Scott Umstattd on Unsplash […]

Facemask to Filament: 3D Printing with Recycled Facemasks

Photography courtesy of Peter Rosso As a first line of defence against the spread of COVID-19 the facemask, a simple covering worn to reduce the spread of infectious agents, has affected the lives of billions across the globe. An estimated 129 billion facemasks are used every month, of which, most are designed for single use. […]

Demystifying Digital “X” – ICED Conference Paper

The DMF lab recently (remotely) attended the International Conference of Engineering Design (ICED) 2021 to present seven papers. One of these, authored by Chris Cox, Ben Hicks and James Gopsill, investigates the new language surrounding the paradigm shift towards digital engineering. This presentation was shown at ICED 2021 as part of the “Digital Twins” panel, […]