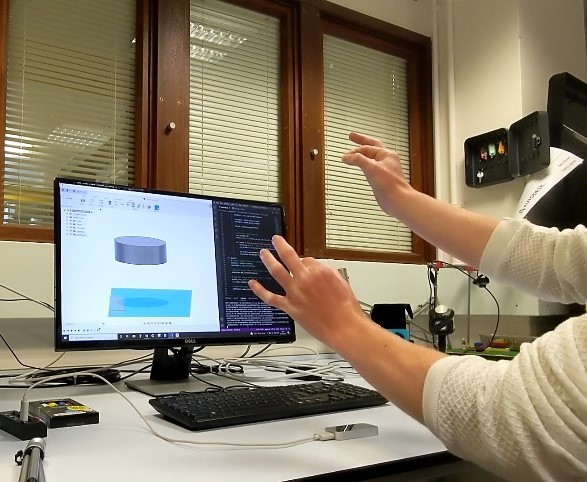

Gesture-controlled CAD

This project took place as part of a summer internship in 2021.

The initial goal of the project was to replicate the outcome of previous applications of hand tracking in Computer-Aided Design (CAD), namely the navigation interface demonstrated by Elon Musk and SpaceX (link) and an integration demonstrated by Autodesk (link). Developing this platform with the Fusion API and the Leap Motion SDK would allow the lab to conduct further research in this area in the future.

Once these applications had been replicated, new ones could be developed and built upon, and their suitability investigated.

The Gesture Control System

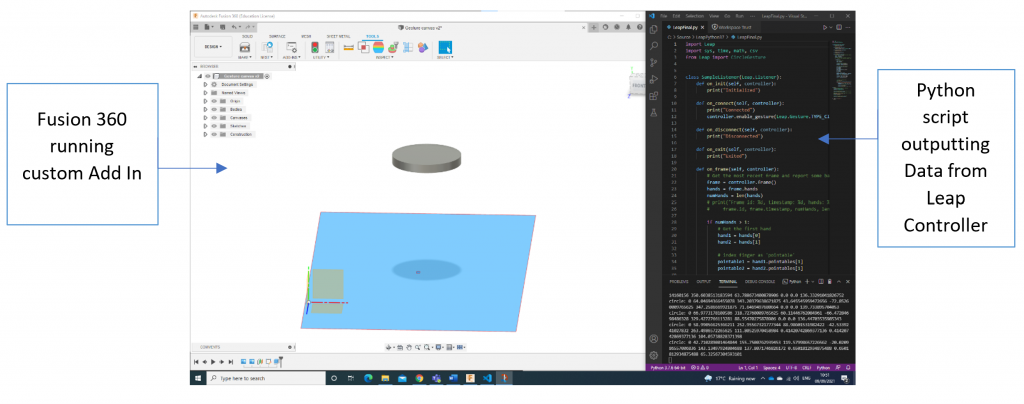

Data from the Leap Motion device was passed to a Fusion 360 add-in to create a real-time interface. This was done by continuously updating a CSV file that the add-in could read. This was sufficient in this case, but a more robust solution may be required for more complex interfaces.
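The add-in code is not included in this post, so the following is only a minimal sketch of how a CSV handoff like this might look in Python, under stated assumptions: the Leap script overwrites a small CSV file with the latest frame, and the Fusion 360 add-in reads the last row whenever it polls. The column names in `FIELDS` and the helpers `write_frame` and `read_frame` are hypothetical. Writing to a temporary file and swapping it in with `os.replace` is one way to stop the reader from ever seeing a half-written file.

```python
import csv
import os
import tempfile

# Hypothetical column layout for one frame of hand data; the real
# script's fields are not documented in the post.
FIELDS = ["timestamp", "palm_x", "palm_y", "palm_z", "roll", "yaw", "pinch"]


def write_frame(path, frame):
    """Replace the file at `path` with a CSV holding only the latest frame."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory first...
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerow(frame)
    # ...then swap it in, so a reader sees either the old file or the new one.
    os.replace(tmp_path, path)


def read_frame(path):
    """Return the most recent frame as a dict of floats, or None if absent."""
    try:
        with open(path, newline="") as f:
            rows = list(csv.DictReader(f))
    except FileNotFoundError:
        return None
    if not rows:
        return None
    return {key: float(value) for key, value in rows[-1].items()}
```

On Windows, `os.replace` can fail with `PermissionError` if the add-in happens to have the file open at that moment, so a real writer would probably need to retry.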

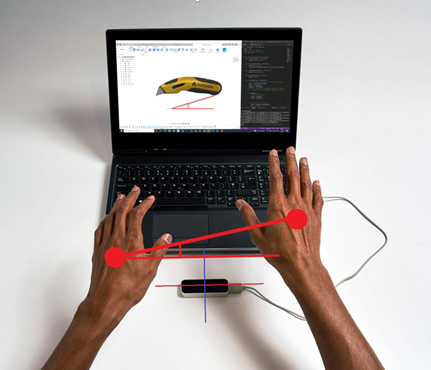

Visualisation applications were implemented by moving the camera within Fusion 360. The most successful of these adjusted the roll, yaw and zoom of the model simultaneously. These parameters could be controlled either with one hand (the roll and yaw of the hand setting the model's roll and yaw, and the height of the hand controlling the zoom) or with two hands, where the model is oriented as if it were held between them; this was an incredibly intuitive way to interact with the model.
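To illustrate the two control modes, here is a hedged Python sketch of a mapping from hand pose to camera parameters; it is not the project's code. It assumes palm positions in millimetres in a right-handed frame with y pointing up and z pointing towards the user (the Leap Motion convention), and hand roll and yaw already in radians. The constants and the names `CameraPose`, `one_hand_pose` and `two_hand_pose` are invented for the example, and applying the result to the Fusion 360 viewport camera is not shown. With two hands, the vector between the palms gives yaw (rotation about the vertical axis) and roll (tilt out of the horizontal), and the distance between the hands sets the zoom.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning constants: the post does not give the values used.
REST_HEIGHT_MM = 200.0  # palm height that maps to the default zoom
ZOOM_PER_MM = 0.005     # change in distance multiplier per mm of height
MIN_ZOOM, MAX_ZOOM = 0.2, 5.0


@dataclass
class CameraPose:
    roll: float  # radians
    yaw: float   # radians
    zoom: float  # multiplier on the default viewing distance


def one_hand_pose(hand_roll, hand_yaw, palm_height_mm):
    """Roll and yaw follow the hand directly; palm height drives the zoom.

    Raising the hand moves the camera away (an arbitrary choice here).
    """
    zoom = 1.0 + (palm_height_mm - REST_HEIGHT_MM) * ZOOM_PER_MM
    zoom = min(max(zoom, MIN_ZOOM), MAX_ZOOM)
    return CameraPose(hand_roll, hand_yaw, zoom)


def two_hand_pose(left_palm, right_palm, rest_span_mm=250.0):
    """Orient the model as if it were held between the two palms."""
    dx = right_palm[0] - left_palm[0]
    dy = right_palm[1] - left_palm[1]
    dz = right_palm[2] - left_palm[2]
    # Yaw: rotation about +y that carries +x onto the palm-to-palm vector.
    yaw = math.atan2(-dz, dx)
    # Roll: how far that vector tilts out of the horizontal plane.
    roll = math.atan2(dy, math.hypot(dx, dz))
    # Hands further apart bring the camera closer (model appears larger).
    span = math.sqrt(dx * dx + dy * dy + dz * dz)
    zoom = min(max(rest_span_mm / span, MIN_ZOOM), MAX_ZOOM) if span else MAX_ZOOM
    return CameraPose(roll, yaw, zoom)
```

Clamping the zoom keeps an extreme palm height or hand separation from throwing the camera far away from the model.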

Next was geometry creation. This was a much harder challenge, as very little previous work had been done in this area. The closest was a Virtual Reality CAD project that used a game engine to create geometry and sent the data to the Fusion API to build the model (link). The goal for this project was not only to send the data to Fusion but also to provide a real-time interface to visualise the result.

This interface is most useful in the early geometric design phase, where the rough geometry and structure of the model are created. Being able to express concepts quickly allows fast iteration and speeds up solution-finding.

There is a trade-off between workflow benefit and accuracy. Geometry could be created step by step, but this offers little benefit over a mouse, whereas the final outcome allows a body’s size and location to be chosen with a single gesture. The disadvantage is that it is hard to control all of these variables at once, especially because 3D geometry created by a 3D gesture is displayed on a 2D screen with a fixed camera angle. A future participant study could investigate these nuances and help design a more intuitive interface.

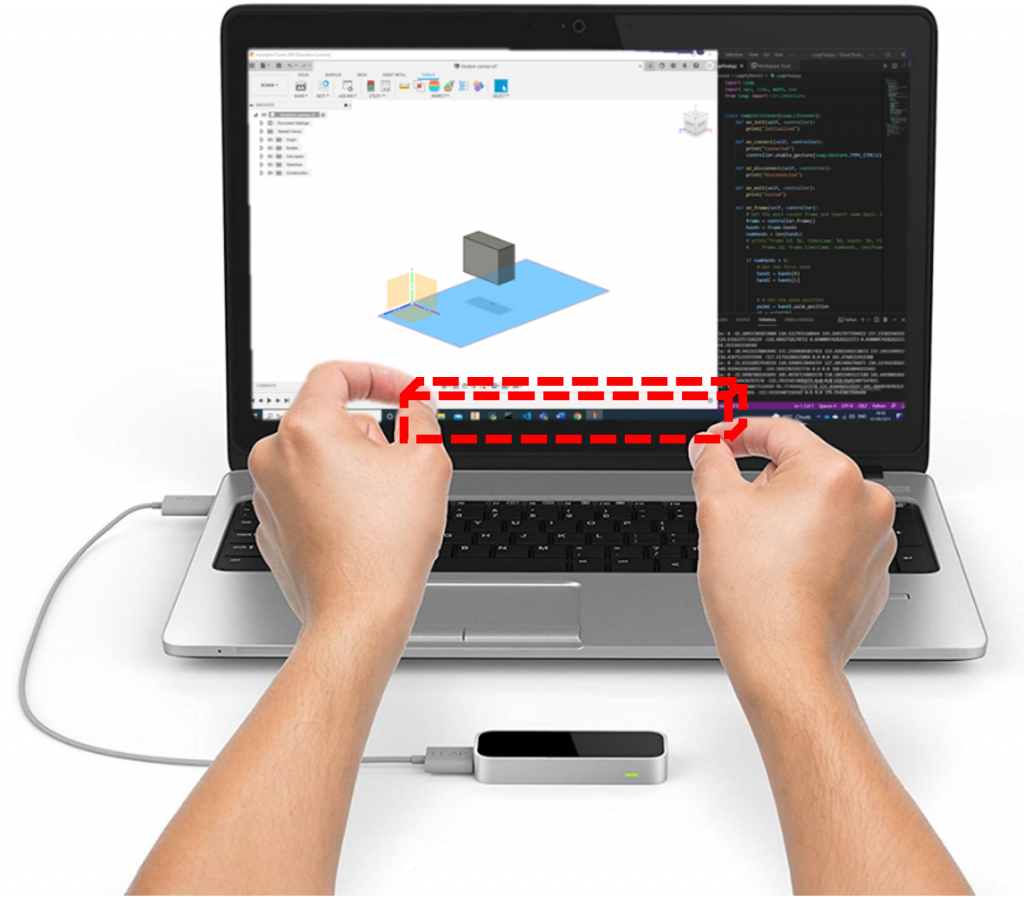

Towards the end of this project, a paper was published discussing the best gestures for a CAD interface (link). Many of its ideas had already been implemented, but it helped refine the outcome and provided a framework to work from. It influenced the decision to first generate a unit body at the origin and then use a two-handed pinch gesture to rescale and reposition it. Once the body was positioned, the pinch could be released, and the body turned green to confirm placement.
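The unit-body-then-pinch interaction can be sketched as a small state machine. This hypothetical Python version is not the project's code: it assumes each hand reports a pinch strength between 0 and 1 and a pinch point in millimetres. While both hands pinch, the box is centred on the midpoint of the two pinch points and sized by their separation along each axis; when either hand releases, the box is marked confirmed, which in the add-in would correspond to turning the body green. The thresholds and the names `Box`, `box_between` and `PinchPlacer` are illustrative.

```python
from dataclasses import dataclass

# Hypothetical thresholds on a 0..1 pinch strength. Releasing below a lower
# value than the start threshold (hysteresis) stops tracking noise from
# ending a placement early.
PINCH_ON = 0.8
PINCH_OFF = 0.5


@dataclass
class Box:
    center: tuple = (0.0, 0.0, 0.0)  # unit body starts at the origin
    size: tuple = (1.0, 1.0, 1.0)
    confirmed: bool = False          # the add-in would colour it green


def box_between(p, q, min_size=1.0):
    """Box centred between two pinch points, spanning them on each axis."""
    center = tuple((a + b) / 2 for a, b in zip(p, q))
    size = tuple(max(abs(a - b), min_size) for a, b in zip(p, q))
    return center, size


class PinchPlacer:
    def __init__(self):
        self.box = Box()
        self.pinching = False

    def update(self, left_tip, right_tip, left_strength, right_strength):
        """Advance the gesture by one tracking frame and return the box."""
        if self.box.confirmed:
            return self.box  # placement finished; ignore further pinches
        if not self.pinching:
            # Start only when both hands pinch firmly.
            self.pinching = min(left_strength, right_strength) >= PINCH_ON
        elif min(left_strength, right_strength) < PINCH_OFF:
            # Either hand letting go ends the gesture and confirms the box.
            self.pinching = False
            self.box.confirmed = True
            return self.box
        if self.pinching:
            self.box.center, self.box.size = box_between(left_tip, right_tip)
        return self.box
```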

The final outcome is usable and, in some cases, results in faster model creation than a mouse and keyboard. There are, however, a few drawbacks that must be addressed for it to be a truly useful interface. First, the responsiveness of the interface is inconsistent, which can be frustrating when the hands are held in the air for an extended period. Second, one hand may occasionally be occluded by the other so that the Leap Motion Controller cannot detect it. Finally, the working area is relatively small, making it difficult to create larger and more complex models.

Next Steps for the Project

There is plenty of opportunity to optimise the code, particularly the communication between the Leap Python script and the Fusion API. Gesture suitability could also be investigated further through a participant study, using the aforementioned paper (link) as a framework. This project serves as a proof of concept demonstrating the feasibility of such an interface.
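One possible direction for that communication, offered only as an assumption-laden sketch and not something this project implemented, is to send each frame as a JSON datagram over a local UDP socket instead of rewriting a CSV file. The receiver drains everything queued and keeps only the newest frame, since stale frames are of no use to a real-time interface. The port number and the functions `make_sender`, `send_frame`, `make_receiver` and `latest_frame` are hypothetical, and how the Fusion 360 add-in would poll the socket is left open.

```python
import json
import socket

HOST, PORT = "127.0.0.1", 50007  # hypothetical local address


def make_sender():
    """UDP socket for the Leap script side."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)


def send_frame(sock, frame, addr=(HOST, PORT)):
    """Send one frame (a JSON-serialisable dict) as a single datagram."""
    sock.sendto(json.dumps(frame).encode("utf-8"), addr)


def make_receiver(addr=(HOST, PORT)):
    """Non-blocking UDP socket for the add-in side, so polling never stalls."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(addr)
    sock.setblocking(False)
    return sock


def latest_frame(sock):
    """Drain all queued datagrams and return only the newest frame, or None."""
    latest = None
    while True:
        try:
            data, _ = sock.recvfrom(65535)
        except BlockingIOError:
            return latest  # queue empty
        latest = json.loads(data.decode("utf-8"))
```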

To solve the problem of occluded hands, multiple Leap Motion controllers could be used. This approach is becoming more widespread, but the Leap Python SDK is unlikely to support it, as it is already several versions behind the SDK for game engines such as Unity. Such a project could therefore involve a game engine and result in some interesting VR applications for CAD (link).

There is also potential for future development of the Fusion API, as it was not designed for real-time interfaces such as this one. If such an interface can be shown to be useful, Autodesk would have a motivation to improve certain aspects of the API to support a more streamlined interface.

This project has demonstrated the feasibility and limitations of a gesture-based CAD interface. It will hopefully spark further research leading to a novel interface that makes interaction with CAD software more accessible and more efficient.

It has been a great experience to collaborate in person in the lab after working on remote projects for the past year. Working on something that is new, exciting and has real applications is why I chose a career in engineering, and this has shown me that working in industry is not the only way to do this. It has given me an interesting insight into the field of research and the challenges that come with it.

Lloyd Cleary-Richards

People

Lloyd Cleary-Richards

Researcher

Chris Snider

Senior Lecturer

Blog Posts

TURA investigators visit Bristol

From the 21st to the 24th June, I was very glad to host some of the co-investigators (Dr. Aleksandra Kristikj, Dr Giacomo Barbeiri and Associate Professor Freddy Zapata) from our WUN funded, Transdisciplinary Urban Agriculture (TURA) project in Bristol where we progressed work on the project and visited a range of agricultural sites in Bristol […]

UK-Colombia collaboration and WUN bid submission

I returned to visit la Universidad de Los Andes (Uniandes) in August this year after a pandemic induced hiatus. Prior to 2020, we had been working with Uniandes to develop proposals to do with agritech and transdisciplinary engineering. During the pandemic we were able to manage some collaboration through project clean access (leading to a […]

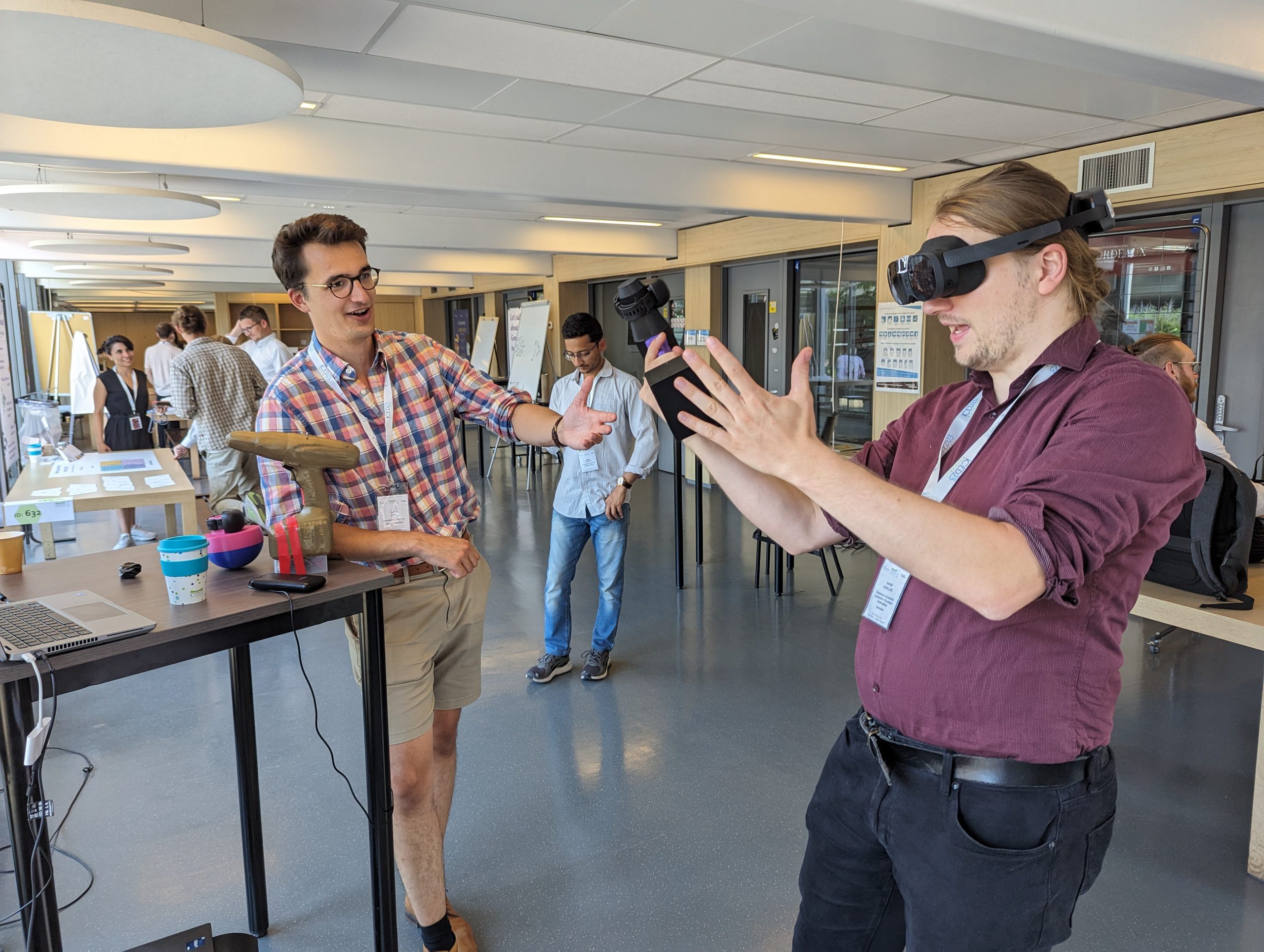

21st Century Prototyping exhibit at ICED 2023

Setting the stage to show the community our technical capabilities and upcoming research, this week the 21CP team exhibited a range of demos at the ICED 2023 conference! We had three sets of demos up and running for attendees to try: Design in VR: Aman led the charge with a demo showing the different ways […]

IDEA challenge 2023

The IDEA challenge returned in May 2023 for its third iteration! This time it was hosted by the University of Zagreb and building on the success of the the challenge’s first two iterations was set to be bigger and better than ever! The challenge saw teams working fully virtually to develop a CubeSat satellite that […]

21st Century Prototyping at the ProSquared Network+ Launch

This week Chris and the team joined the launch event for the new ProSquared Network on the Democratisation of Digital Devices. This 5-year network aims to connect industry and academia to find new ways to overcome production barriers at lower volumes, by making design, prototyping, and manufacture faster and more efficient. Doing so will be […]

DMF @ DCC’22

The DMF attended the tenth International Conference on Design Cognition and Computing (DCC’22) in Glasgow and received the best poster prize and runner up in the best paper award!

Technical Debt – No Time To Pay

In recent years there has been a lot of talk about technical debt in various fields. Technical debt is the cost that an individual or a group of people will incur in future for hasty decisions in the present, which are often motivated by lack of resources and/or time. Photo by Scott Umstattd on Unsplash […]

Facemask to Filament: 3D Printing with Recycled Facemasks

Photography courtesy of Peter Rosso As a first line of defence against the spread of COVID-19 the facemask, a simple covering worn to reduce the spread of infectious agents, has affected the lives of billions across the globe. An estimated 129 billion facemasks are used every month, of which, most are designed for single use. […]

Demystifying Digital “X” – ICED Conference Paper

The DMF lab recently (remotely) attended the International Conference of Engineering Design (ICED) 2021 to present seven papers. One of these, authored by Chris Cox, Ben Hicks and James Gopsill, investigates the new language surrounding the paradigm shift towards digital engineering. This presentation was shown at ICED 2021 as part of the “Digital Twins” panel, […]