Written by Mike Wharton, one of our excellent summer interns.

I’m now about a third of the way through my project, having dived head-first into the world of haptics. There have been a number of interesting tangents and discoveries along the way, as well as unexpected links to psychology and audio equipment.

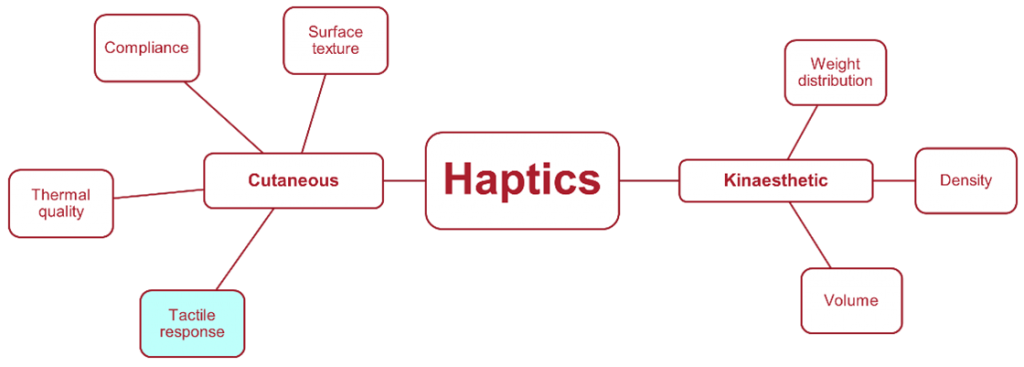

Haptic or tactile means “related to touch”, and these experiences involving touch can be split into two main categories:

The first, cutaneous, means “related to skin” and includes all the thermal and mechanical receptors in the skin, which are most densely concentrated on your fingertips and face.

The second, known as kinaesthetic, is related to the sense which detects your body position, weight, and muscle movement using mechanical receptors in muscles, tendons, and joints.

When you hold any object, a number of senses are used to characterise its position and qualities, and touch constitutes only a small part of this hierarchy. Your brain instantly characterises every object you hold without you realising, often even before you have touched it.

Cutaneous cues come from surface texture, compliance, thermal qualities, and tactile responses. Kinaesthetic cues come from the weight distribution, density, and volume. Existing research has explored replicating these qualities for use in prototypes, and in fact I’ve spoken to quite a few academics here in the DMF research group who have done just that.

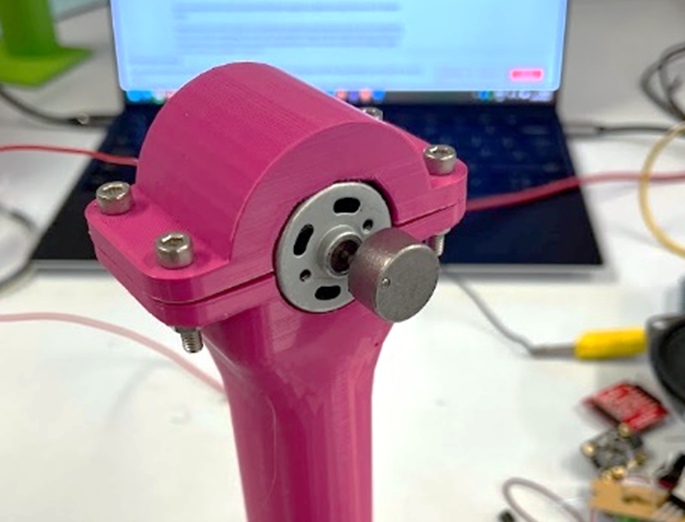

This project set out to explore purely the tactile response element of interactions. For example, this would include the use of vibration motors, compliant mechanisms, or buttons, ideally integrated in an elegant, standalone way, to see if they make prototypes feel more realistic.

There are three main types of vibration motor: piezoelectric, Eccentric Rotating Mass (ERM), and Linear Resonant Actuator (LRA). Piezo motors are attractive because they can simultaneously detect pressure and create a response, but they are an expensive new technology requiring high voltages. As a result, this project mainly explores a mixture of ERM and LRA motors.

ERMs spin an off-centre mass, working on a similar principle to a brick in a washing machine, whereas LRA motors rely on coils and magnets to push and pull a diaphragm and/or mass back and forth, much like a typical loudspeaker. This means that ERMs have an element of ‘spooling up’ and ‘spooling down’, requiring a slightly higher activation voltage before they settle into a repeatable buzz. LRAs, on the other hand, are incredibly precise: I found their spool-up times to be almost negligible for the small motors I’ve been using, at under 1 millisecond. One thing to bear in mind is that LRAs need to be told when to move back and forth (that is, they need an alternating drive signal), whereas a DC ERM motor will quite happily spin given a current, so there is more capability for advanced effects with LRA motors (although these might still be possible with small ERMs). As well as programming your own effects using an Arduino or equivalent, you can use a DRV2605 chip to get more interesting effects with both motor types.
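To make the difference in drive signals concrete, here is a minimal Python sketch. It is only an illustration, not the code used in the project: the 175 Hz resonant frequency, the 7 kHz update rate, and the function names `erm_drive` and `lra_burst` are all assumptions chosen for the example. An ERM just needs a steady level, while an LRA needs a signal that alternates at (or near) its resonant frequency.

```python
import math


def erm_drive(duration_s, update_rate_hz):
    """ERM: a constant (DC) drive level for the whole duration."""
    n = round(duration_s * update_rate_hz)
    return [1.0] * n


def lra_burst(resonant_hz, cycles, update_rate_hz):
    """LRA: a sine burst that pushes and pulls the mass at its resonance.

    Returns drive levels in the range [-1, 1], one per update tick.
    """
    n = round(cycles * update_rate_hz / resonant_hz)
    return [
        math.sin(2 * math.pi * resonant_hz * i / update_rate_hz)
        for i in range(n)
    ]


# Hypothetical values for illustration only.
UPDATE_RATE_HZ = 7000
erm = erm_drive(duration_s=0.02, update_rate_hz=UPDATE_RATE_HZ)
lra = lra_burst(resonant_hz=175, cycles=5, update_rate_hz=UPDATE_RATE_HZ)

print(len(erm), "ERM samples, all at level", erm[0])
print(len(lra), "LRA samples, swinging between", min(lra), "and", max(lra))
```

On real hardware these levels would still need to be turned into a motor voltage (for example through a driver chip or an H-bridge), which is outside the scope of this sketch.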

ERMs are much cheaper and more readily available than LRA motors, and it’s surprising how much punch they can pack into such a small housing. I experimented with a 12V ERM motor which probably had enough inertia to vibrate a chair, so at least I now know where the upper limit lies.

Most smartphones, in fact almost all that I’ve found, use LRA motors carefully calibrated to resonate (hence the name) so that they can convincingly and efficiently produce haptic effects. One of my suspicions going into this project was that a lot of this technology is in-house proprietary research or confidential intellectual property, and unfortunately I think this is probably true. For example, one popular phone brand with one of the largest haptic engines uses a proprietary rectangular mass controlled by three coils to allow for side-to-side oscillations.

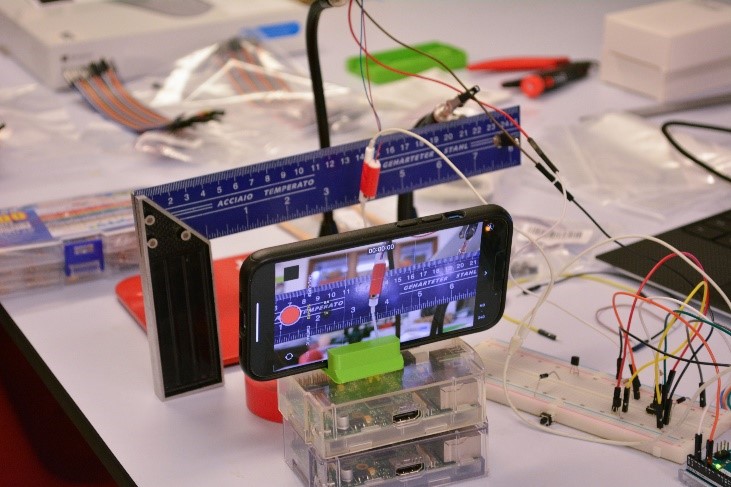

To characterise the motors I have gone through a number of different tests. I’m particularly proud that I came up with the idea of affixing a contact microphone to freely supported motors, which lets me accurately determine vibration frequencies by plotting a Fourier spectrum of the recorded audio in Audacity, a free, open-source audio editor. I was initially planning to use photogrammetry (getting data from video cameras), but there were lots of issues with off-axis displacements, high frequencies, and, particularly for LRA motors, often a lack of inertial displacement altogether. I will, however, blow the dust off the laser vibrometer over the next few weeks too, so there may be more interesting findings there.
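For anyone curious what the Fourier spectrum step boils down to, here is a minimal Python sketch of the idea. It is not how Audacity does it internally, and it uses a synthetic signal rather than a real recording: the 175 Hz fundamental, the weaker 350 Hz harmonic, the 4 kHz sample rate, and the function name `dominant_frequency` are all assumptions made for illustration. It uses a deliberately simple discrete Fourier transform so that it runs with only the standard library.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the largest peak in the magnitude spectrum."""
    n = len(samples)
    mean = sum(samples) / n
    centred = [s - mean for s in samples]  # remove any DC offset

    best_bin, best_mag = 0, 0.0
    # Only bins below the Nyquist frequency are meaningful for a real signal.
    for k in range(1, n // 2):
        acc = 0j
        for i, s in enumerate(centred):
            acc += s * cmath.exp(-2j * math.pi * k * i / n)
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)

    # Each bin is sample_rate / n hertz wide.
    return best_bin * sample_rate / n


# Synthetic stand-in for a contact-microphone recording (assumed values).
SAMPLE_RATE = 4000
N_SAMPLES = 800  # 0.2 s of audio, giving 5 Hz resolution
signal = [
    math.sin(2 * math.pi * 175 * i / SAMPLE_RATE)
    + 0.3 * math.sin(2 * math.pi * 350 * i / SAMPLE_RATE)
    for i in range(N_SAMPLES)
]

peak_hz = dominant_frequency(signal, SAMPLE_RATE)
print("Dominant frequency:", peak_hz, "Hz")
```

For a real recording you would probably want a proper FFT library and a window function, since this naive version is slow for long clips and assumes the tone lines up neatly with a frequency bin.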

Real objects may have a number of different interacting frequencies, so it remains to be seen just how much complexity of vibration is required to trick the brain into believing that the prototype or object you are holding is animated. I suspect it is actually not very much. Sight and sound are the dominant senses, and the amount of information you get from touch is significantly less. Many modern laptops have touch-pads which don’t depress at all, instead relying on the compression of your fingertip and an LRA motor to trick your brain. I even wonder whether sound alone will be as convincing as vibrations, if not more so; after all, the two are incredibly interconnected. It has been shown that touch can even be used as a pseudo-hearing-aid for deaf people. Furthermore, a loudspeaker is in itself an LRA motor. Maybe I’ll try cutting one up and possibly even adding masses to it, or maybe the sound itself will provide convincing mechanoreceptive and auditory vibrations at the same time.

More holistic links between neurology, psychology, and engineering are particularly interesting to me. One of the joys of research is that it doesn’t discriminate: it leads you to explore what turns out to be an incredibly multidisciplinary reality. See you in a few weeks.